**The Last Algorithm: A Potential Breakthrough in AI**

As I sit here reflecting on the rapidly evolving field of artificial intelligence, I'm filled with an unsettling sense that we're on the cusp of something extraordinary: a development that will rewrite the rules and propel us toward a future that's both thrilling and unpredictable. My intuition is that 2026 may be the year AI reaches an inflection point, yielding outcomes akin to Artificial General Intelligence (AGI) or, more accurately, Artificial Super Intelligence (ASI). But I don't expect this to come from a new, revolutionary model. I expect it to come from embracing a fundamental principle, a foundational algorithm that I've come to call "The Last Algorithm." The concept deserves a name that sparks curiosity and excitement, much as the discovery of alien life in our galaxy would. When we encounter extraterrestrial civilizations and ask them about the milestone that propelled us toward this point, they might respond with a nod of recognition, acknowledging it as the culmination of humanity's collective effort.

The Ralph Loop, conceptualized by Geoffrey Huntley, is an early indicator pointing towards this potential breakthrough. I first mentioned it in February 2025 in my post "AI's Ultimate Use Case: Transition from Current State to Desired State." Since then, I've written numerous pieces exploring the idea but always with a note of caution due to its complexity and seriousness. Yet, I feel that the world is peculiar enough for groundbreaking concepts like this to slip through the cracks unnoticed.

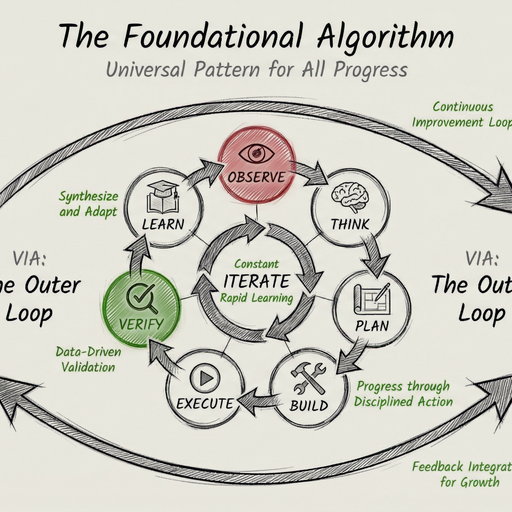

My issue with loops like Ralph's is their limited scope. They're too focused on features and code, grinding at a microscopic level. My approach, currently in early alpha within my PAI skill, takes a broader view. It's about creating an algorithm that can address any problem, not just specific domains or tasks. What feels tractable is making it possible to achieve euphoric surprise across the trillions of nano and micro problems humans face daily.

The concept hinges on capturing the ideal state, which serves as both the goal and the verification criteria. From there, the work is to close the gap between the current state and that ideal state through experimentation and iteration, making things measurable and granular enough that we can properly hill-climb towards it. The Ralph Loop and similar projects are brilliant but tightly scoped to specific domains; my algorithm aims for generality.
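To make the shape of that loop concrete, here is a minimal Python sketch. It is not the PAI implementation, just an illustration under stated assumptions: the names `Criterion`, `total_gap`, `neighbors`, and `hill_climb` are hypothetical, the state is a toy dictionary of integers, and each "experiment" is a one-unit change to one value. The ideal state is written as a list of measurable criteria, and the same criteria are used both to steer the search and to verify the result.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterator, List

State = Dict[str, int]


@dataclass(frozen=True)
class Criterion:
    """One granular, measurable piece of the ideal state (hypothetical type)."""
    name: str
    gap: Callable[[State], int]  # 0 means this criterion is satisfied


def total_gap(state: State, criteria: List[Criterion]) -> int:
    """Distance from the ideal state; 0 means every criterion verifies."""
    return sum(c.gap(state) for c in criteria)


def neighbors(state: State) -> Iterator[State]:
    """Candidate experiments: nudge one value up or down by one unit."""
    for key in state:
        for delta in (-1, 1):
            candidate = dict(state)
            candidate[key] += delta
            yield candidate


def hill_climb(start: State, criteria: List[Criterion], max_steps: int = 100) -> State:
    """Greedy hill-climbing: keep the best experiment only if it shrinks the gap."""
    current = dict(start)
    for _ in range(max_steps):
        current_gap = total_gap(current, criteria)
        if current_gap == 0:
            break  # ideal state reached and verified
        best = min(neighbors(current), key=lambda s: total_gap(s, criteria))
        if total_gap(best, criteria) >= current_gap:
            break  # no experiment improves things; stuck on a plateau
        current = best
    return current


# Toy, non-software example: the ideal state doubles as goal and verification.
ideal_state = [
    Criterion("sleep at least 8 hours", lambda s: max(0, 8 - s["hours_of_sleep"])),
    Criterion("inbox is empty", lambda s: abs(s["unanswered_emails"])),
]

current_state = {"hours_of_sleep": 5, "unanswered_emails": 4}
result = hill_climb(current_state, ideal_state)
print(result, "verified:", total_gap(result, ideal_state) == 0)
```

In this sketch the criteria return a graded gap, not a pass/fail flag, so every step has a measurable direction to climb in. A real version would swap the toy `neighbors` function for whatever actually generates experiments, which is where the hard part lives.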

A conversation with Andrej Karpathy significantly influenced my current version of the idea. He noted the shift from Software 1.0, which was about writing software, to Software 2.0, focusing on verifying it. I see this as a universal principle – verifiability is the key to making things work at scale. It's not just limited to software but can be applied to any goal or problem.

On a speculative note, I'm predicting that continuous algorithm approaches will generalize into universal problem solvers by 2026. They don't have to work perfectly; even minor improvements could jump us ahead significantly. The world feels strange enough right now for a basic idea like this, iterated on by a community of enthusiasts and hackers, to move the state of the art forward.

While acknowledging the high risks and uncertainties involved, I believe there's a 50-75% chance that "The Last Algorithm" yields major gains over current agentic harnesses, even if it's not perfected. The challenge now is to test this concept against reality, with experimentation, iteration, and verification being the keys to unlocking its full potential.