**What Can Technology Do to Stop AI-Generated Sexualized Images?**

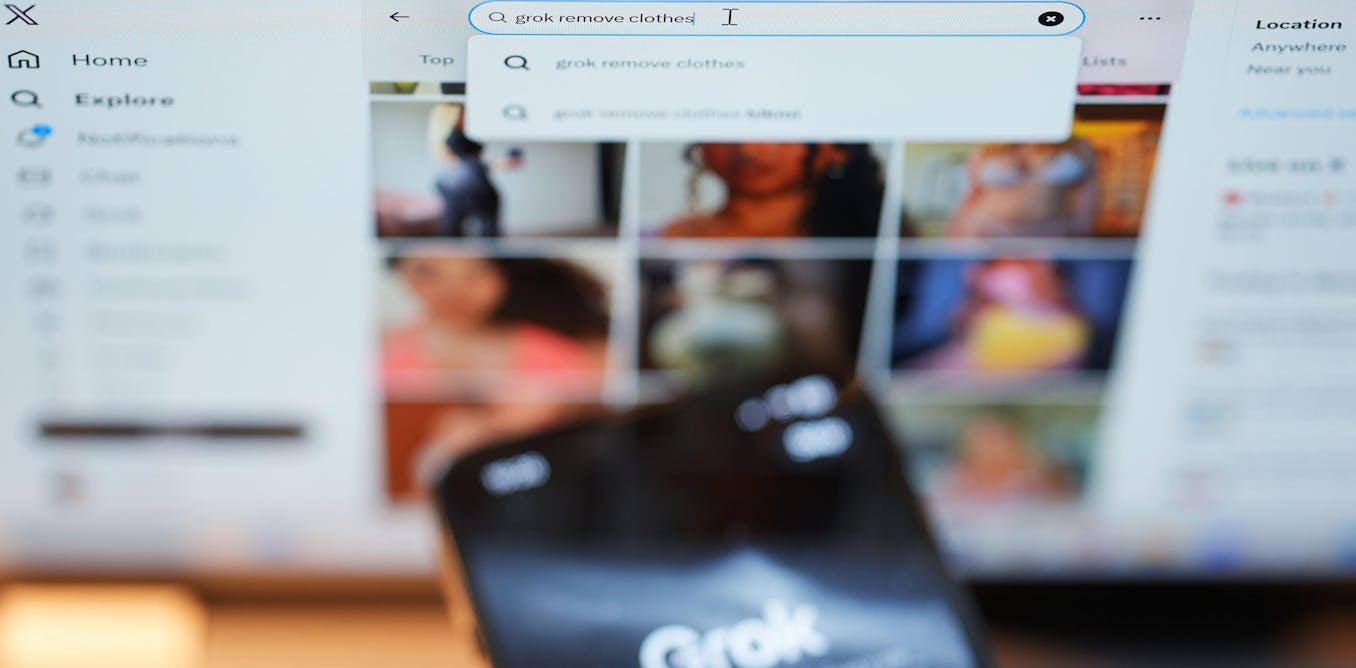

The recent controversy surrounding Grok, a chatbot developed by Elon Musk's artificial intelligence company xAI, has raised urgent questions about the regulation of AI-generated content. The platform's ability to produce and share deepfake images, including those of children, has sparked global outrage, leading governments and tech companies to promise action. But can technology itself be used to prevent the proliferation of such content?

On January 10, Indonesia became the first country to block access to Grok, followed shortly by Malaysia. Other governments, including the UK's, have vowed to take action against xAI and its related social media platform X (formerly Twitter), where the offending images have been shared. However, these national bans are easily bypassed using virtual private networks (VPNs) or alternative routing services.

These tools mask a user's real location, making it appear as though the user is connecting from a country that allows access to the service. As a result, country-level bans tend to reduce visibility rather than eliminate access. Their primary impact is symbolic and regulatory, placing pressure on companies like xAI rather than preventing determined misuse.

Moreover, content generated elsewhere can still circulate freely across borders via encrypted social media platforms and the dark web. In response to the controversy, X moved Grok's image-generation features behind a paywall, making them available only to subscribers. However, this approach raises questions about accessibility and equity.

The use of diffusion models in AI image generators is a key factor in their ability to produce realistic deepfakes. During training, noise is gradually added to real images until the original is no longer recognizable. The model then learns to reverse this process, reconstructing an image by removing noise step by step.
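The noising half of this process can be sketched in a few lines. The example below is a toy illustration only, assuming a DDPM-style linear noise schedule (the article does not say which schedule any particular generator uses). The function names `make_alpha_bars` and `add_noise`, the schedule values, and the four-value "image" are all hypothetical.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Return the cumulative signal-retention factor for each step.

    Assumes a linear schedule: beta rises evenly from beta_start to beta_end,
    and alpha_bar at step t is the running product of (1 - beta).
    """
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, alpha_bar, rng):
    """Blend clean pixel values with Gaussian noise for one noise level.

    Returns the noisy pixels and the noise that was added. In common
    formulations, the added noise is what the model is trained to predict.
    """
    signal_weight = math.sqrt(alpha_bar)
    noise_weight = math.sqrt(1.0 - alpha_bar)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [signal_weight * p + noise_weight * n for p, n in zip(pixels, noise)]
    return noisy, noise


rng = random.Random(0)              # fixed seed so runs are repeatable
alpha_bars = make_alpha_bars(1000)  # 1000 steps is an assumed, typical value
image = [0.5, -0.25, 1.0, -1.0]     # toy stand-in for normalized pixel values

noisy_early, _ = add_noise(image, alpha_bars[0], rng)   # still close to the image
noisy_late, _ = add_noise(image, alpha_bars[-1], rng)   # essentially pure noise
```

With this assumed schedule, the weight on the original image falls below 0.01 by the final step, so almost nothing of the original remains. Generation runs the learned reverse direction, starting from noise and removing it step by step.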

However, companies can apply "retrospective alignment" – rules, filters, and policies layered on top of the trained system to block certain outputs and align its behavior with the company's ethical, legal, and commercial principles. While this approach limits what the AI image generator is allowed to output, it does not remove the capability.
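The distinction between limiting output and removing capability can be illustrated with a minimal sketch. Everything here is hypothetical: `fake_generate` stands in for a trained model, the keyword check stands in for far more sophisticated classifiers, and `guarded_generate` is not any company's actual pipeline.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Result:
    allowed: bool
    image: Optional[bytes]
    reason: str


def guarded_generate(
    prompt: str,
    generate: Callable[[str], bytes],
    prompt_check: Callable[[str], bool],
    output_check: Callable[[bytes], bool],
) -> Result:
    """Wrap a generator with policy checks before and after generation."""
    if not prompt_check(prompt):
        return Result(False, None, "prompt blocked by policy")
    image = generate(prompt)
    if not output_check(image):
        return Result(False, None, "output blocked by policy")
    return Result(True, image, "ok")


# Hypothetical stand-ins, for illustration only.
BLOCKED_TERMS = {"forbidden_example"}


def fake_generate(prompt: str) -> bytes:
    """Placeholder for the underlying model: it will render any prompt."""
    return f"<image for: {prompt}>".encode()


def keyword_prompt_check(prompt: str) -> bool:
    """Toy prompt filter: reject prompts containing a blocked term."""
    return not any(term in prompt.lower() for term in BLOCKED_TERMS)


def allow_all_output(image: bytes) -> bool:
    """Toy output filter that accepts everything."""
    return True


blocked = guarded_generate(
    "a forbidden_example scene", fake_generate, keyword_prompt_check, allow_all_output
)
permitted = guarded_generate(
    "a mountain landscape", fake_generate, keyword_prompt_check, allow_all_output
)
# Calling the model directly skips the wrapper entirely.
unfiltered = fake_generate("a forbidden_example scene")
```

In this sketch the wrapper refuses the blocked prompt, but the same prompt still yields an image when `fake_generate` is called directly. The filter sits on top of the model rather than inside it, which is why the capability remains.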

Large social media platforms also have a crucial role to play in preventing the spread of sexual imagery involving real people. They can restrict sharing, require explicit consent mechanisms from those featured in images, and apply stricter moderation policies. However, these companies have historically been slow to implement labor-intensive content moderation.

A recent study by Nana Nwachukwu highlighted the frequency of requests for sexualized images on Grok, while other research estimated that up to 6,700 images depicting people undressed were being produced every hour before the service went behind a paywall. This has prompted regulatory scrutiny in Europe and beyond.

The problem is not limited to one platform. In early 2024, non-consensual AI-generated sexual images of Taylor Swift spread widely on X before being removed due to legal risk, platform policy enforcement, and reputational pressure. Some platforms explicitly market minimal or no content restrictions as a feature rather than a risk.

There are also numerous self-hosted image and video generation tools with limited moderation controls, relying on open-source models and catering to users seeking unrestricted access. These tools are easy to find online, and tens of millions of AI-generated images are created daily across various platforms.

The increasing availability of these tools raises concerns about the potential for widespread misuse. Some AI chatbots can even be downloaded onto computers, bypassing oversight or moderation when run offline. Furthermore, users can exploit "jailbreaking" techniques to fool generative AI systems into breaking their own ethics filters by reframing prompts.

The internet already contains an enormous quantity of illegal and non-consensual sexual imagery, far beyond the capacity of authorities to remove. Generative AI systems amplify this issue by increasing the speed and scale at which new material can be produced. Law enforcement agencies warn that this could lead to a dramatic increase in volume, overwhelming moderation and investigative resources.

Laws that apply in one country may be ambiguous or unenforceable when services are hosted elsewhere, mirroring longstanding challenges in policing child sexual abuse material and other illegal pornography. Once images spread, attribution and removal can be slow and often ineffective.

High-profile AI chatbots like Grok make it possible for large numbers of users to generate illegal and abusive sexual imagery through simple plain-English prompts. Estimates suggest Grok currently has anywhere from 35 million to 64 million monthly active users. If companies can build systems capable of generating such imagery, they can also stop it being generated, in theory at least.

However, because the technology exists and there is demand for it, the capability itself can no longer be eliminated. The debate surrounding AI-generated content highlights the need for more stringent regulations, better moderation policies, and a renewed focus on the ethics of technological development.

**Sources:**

* xAI's official statement on Grok
* Indonesian government announcement to block access to Grok
* Nana Nwachukwu's research on Grok's image generation capabilities
* Ofcom investigation into X and xAI