**ATM Jackpotting Ring Busted: 54 Indicted by DoJ**

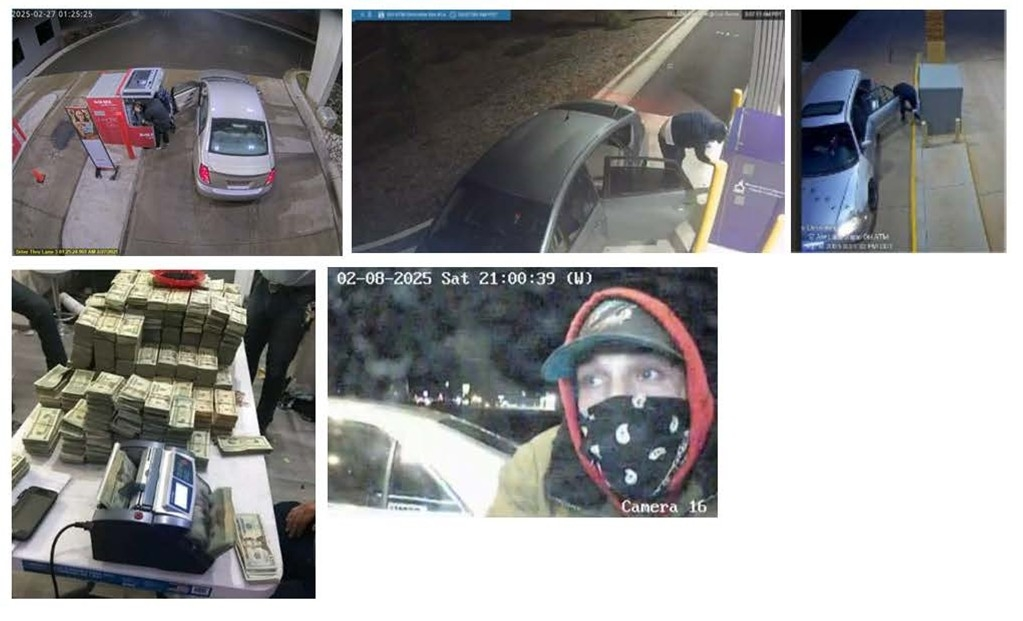

The U.S. Department of Justice has announced a major breakthrough in the fight against cyber-enabled bank robbery, indicting 54 individuals for their involvement in a multi-million-dollar ATM jackpotting scheme.

The case, which links the crimes to the transnational criminal organization Tren de Aragua (TdA), includes charges of fraud, money laundering, and material support to a terrorist organization. According to authorities, the group carried out coordinated burglaries, laundered the stolen money, and used part of the proceeds to support criminal and terrorist activities.

ATM jackpotting is a sophisticated form of cyber-enabled bank robbery in which criminals infect an ATM with malware or use physical access to force it to dispense cash on demand. Instead of stealing cards or PINs, attackers break into the ATM's internal system, usually by opening the cabinet, connecting a device, or replacing the hard drive. Once inside, they run malicious software that sends unauthorized commands to the cash dispenser, causing the machine to "jackpot" and release all available money.

The Ploutus malware used in this scheme was designed to issue unauthorized commands associated with the ATM's Cash Dispensing Module, forcing withdrawals of currency. It could also delete evidence of itself, making the attack difficult for bank employees to detect.

According to the DoJ's press release, the accused individuals, including Jimena Romina Araya Navarro, an alleged leader of Tren de Aragua, face sentences ranging from 20 to 335 years in prison if convicted. The case highlights the increasingly sophisticated nature of cybercrime and the need for law enforcement agencies to work together to dismantle these networks.

Nebraska law enforcement agents played a crucial role in identifying this vast international criminal network and following the money trail back to its terroristic roots in Venezuela. United States Attorney Lesley Woods praised the tireless efforts of Nebraska law enforcement, stating that "this case demonstrates what state, federal, and local law enforcement officers can accomplish when they fully join forces."

The cases fall under the Homeland Security Task Force initiative, which unites U.S. agencies to dismantle cartels, gangs, and transnational criminal networks, with a strong focus on protecting children and prosecuting the most dangerous offenders.

**Timeline of Events:**

* 2013: Ploutus malware first spotted in Mexico
* 2025: Nebraska charges 67 alleged TdA members with crimes ranging from bank fraud and money laundering to child sex trafficking and computer offenses

**Key Players:**

* Jimena Romina Araya Navarro, alleged leader of Tren de Aragua
* Lesley Woods, United States Attorney

**Relevant Agencies:**

* U.S. Department of Justice
* Nebraska law enforcement agencies
* Homeland Security Task Force