What's Your AI Thinking?

AI has come a long way in recent years, with many top-performing language models capable of tackling complex tasks with ease. However, despite their impressive abilities, there is still much we don't know about how these models think and reason. In fact, the current state of affairs is that we can't always tell what an AI model is thinking, which raises serious concerns about its reliability and trustworthiness.

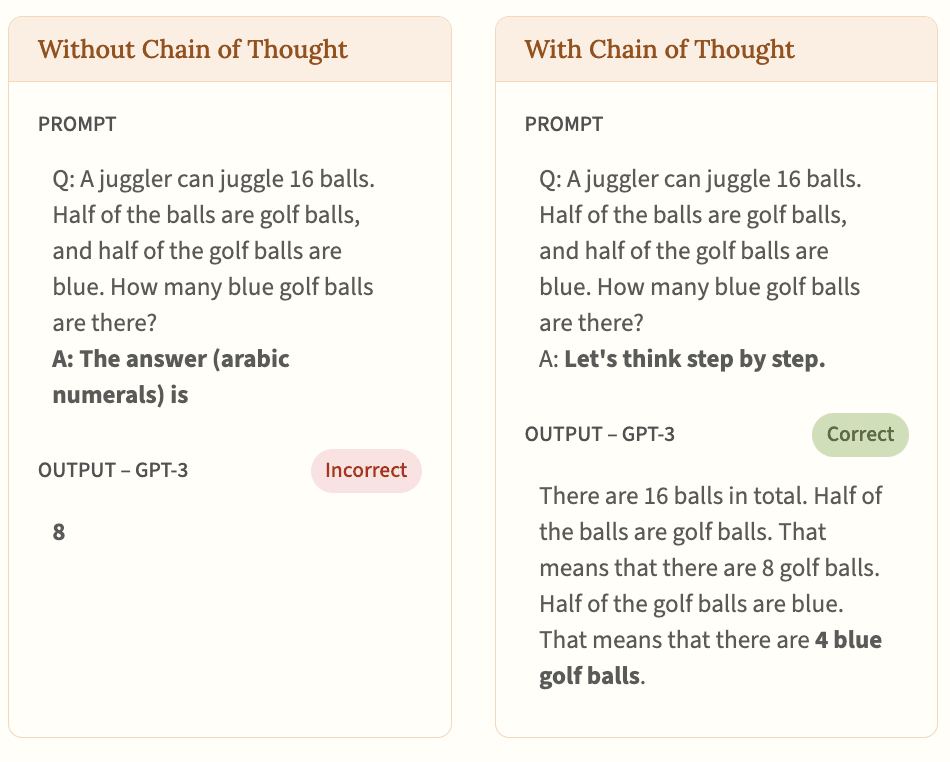

One key area where researchers are actively exploring solutions is in the concept of "chain of thought" (CoT) monitoring. CoT refers to a scratch pad or notebook-like feature within these models that allows them to pass notes to themselves as they reason through a task. This innovation has been instrumental in improving performance on tasks such as arithmetic, common sense, and symbolic reasoning.

How Does Chain of Thought Monitoring Work?

Chain of thought monitoring involves analyzing the CoT to predict a model's behavior. In essence, this technique allows us to observe the model's stated reasoning and make educated guesses about what it will do next. However, there is an important distinction between faithfulness and monitorability: faithfulness means knowing exactly what an AI model is thinking, while monitorability refers to being able to predict its behavior based on observable data.

Researchers argue that the latter approach may be sufficient for many applications, as long as we can ensure that a model's stated reasoning accurately reflects its true intentions. But there are challenges ahead. For instance, CoT faithfulness is not always guaranteed, and understanding what an AI model truly "thinks" remains elusive due to the sheer complexity of their neural networks.

What Are the Limitations of Chain of Thought Monitoring?

One concern is that CoT monitoring may not be robust enough to catch all cases of misbehavior. Researchers have identified potential pitfalls, such as when a model drifts away from being legible or uses indirect optimization techniques to evade monitoring.

Why Might We Lose Chain of Thought Monitorability?

Several factors could lead to a loss of CoT monitorability in future AI models. These include the emergence of novel architectures that bypass traditional CoT reasoning, such as those employing "neuralese" continuously reasoning without having to pass notes.

What Can We Do to Improve Chain of Thought Monitoring?

In light of these challenges, researchers propose several strategies for enhancing CoT monitorability. These include developing new tests to gauge faithfulness and exploring approaches that prioritize predictability over faithfulness.

Strengthening Our Position

By investing in research on CoT monitoring and selecting models that use this feature, we can improve our ability to predict model behavior and prevent potential misbehavior. While we may never know exactly what an AI model is thinking, a more robust CoT monitoring system will significantly enhance our capacity to trust these powerful tools.

Conclusion

AI has made tremendous strides in recent years, but the lack of transparency into their thought processes remains a pressing concern. Chain of thought monitoring holds great promise for addressing this issue, offering a means to predict model behavior based on observable data. As we move forward, it's crucial that researchers continue to develop and refine CoT monitoring techniques to ensure we can trust these powerful tools.

References

The references provided below offer further insights into the topics discussed in this article.

- Baker et al. (2025). Monitorability: A more achievable goal than faithfulness for chain of thought monitoring.

- Korbak et al. (2025). Four reasons we might lose CoT monitorability in future AI models.

- Lanham et al. (2023). Assessing the consistency factor "faithfulness" in chain of thought monitoring.