**Beat the RAM Price Rises with This Free Windows 11 Hack for an Instant Speed Boost**

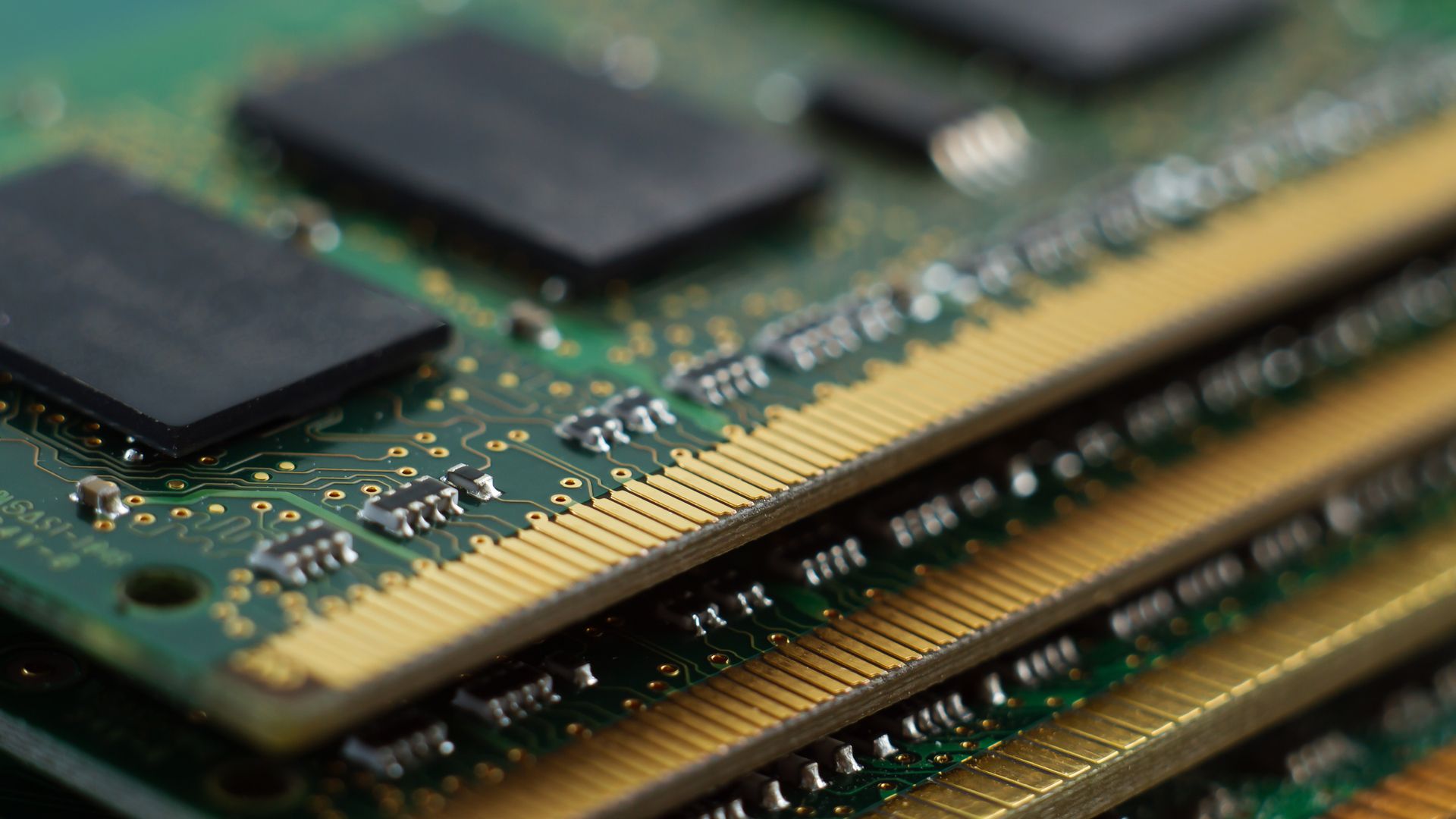

The sharp increase in RAM prices has been a worrying trend for PC enthusiasts, leaving many wondering how they'll afford to upgrade their systems. But what if I told you there's a way to give your PC a memory boost for free? It may sound too good to be true, but it relies on a feature that's been part of Windows for decades: virtual memory.

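Virtual memory lets Windows 11 use a portion of your PC's storage as an overflow area for RAM. Windows keeps this space in a file called the paging file (pagefile.sys), and when physical memory runs short it moves data that isn't actively in use out of RAM and into that file, freeing RAM for the apps you're working in. For those who've been using PCs for years, tweaking this old-school feature was once a handy little "hack" for getting a performance boost. Now that SSDs are far larger and faster than the hard drives of old, it's worth revisiting.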

Before we dive into how to use virtual memory, let's set expectations straight. It won't give you the same boost as installing 32GB of DDR5 RAM. What it can do is help PCs that struggle with multiple apps open at once, keep the system responsive when memory runs low, and improve stability, especially on machines with 4GB or 8GB of RAM.

**How to Enable Virtual Memory in Windows 11**

To get started, follow these steps:

- Open Settings in Windows 11. Click the Start menu and select Settings. In the left-hand pane, select System, then scroll down and click About.

- Scroll to the bottom of the About page, where you'll see a section titled Related links. Click Advanced system settings to open the System Properties window.

- Open the performance options. In the System Properties window, make sure the Advanced tab is selected. Under Performance, click Settings, then select the Advanced tab in the Performance Options window that opens. You'll now see the Virtual memory section.

- Click Change, then untick Automatically manage paging file size for all drives. This lets you choose which drive holds the paging file.

- Set the virtual memory size. Select Custom size, then enter a value in the Initial size (MB) box and a number at least as large in the Maximum size (MB) box. Make sure the maximum doesn't exceed the free space on your selected drive. Windows counts 1GB as 1024MB, so if you have 8GB of RAM and want to allow up to 16GB of virtual memory, enter 16384 in the Maximum size box.

- Click Set, then OK, and restart your PC to apply the changes.
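If you'd rather not do the GB-to-MB arithmetic by hand, the sizing step above can be sketched in a few lines of Python. This is a minimal illustration, not a Windows tool: it assumes the dialog counts 1GB as 1024MB, the function name `paging_file_sizes` is hypothetical, and the 8GB/16GB values and 100GB of free space are example inputs only.

```python
# Minimal sketch: convert the sizes you want (in GB) into the MB values typed
# into the Initial size and Maximum size boxes, rejecting values that won't fit.
# Assumption: the Virtual Memory dialog counts 1 GB as 1024 MB.
# paging_file_sizes is a hypothetical helper, not part of Windows.

MB_PER_GB = 1024


def paging_file_sizes(initial_gb: float, maximum_gb: float, free_mb: int) -> tuple[int, int]:
    """Return (initial_mb, maximum_mb) for the Custom size fields."""
    initial_mb = int(initial_gb * MB_PER_GB)
    maximum_mb = int(maximum_gb * MB_PER_GB)
    # The maximum must be at least as large as the initial size.
    if initial_mb <= 0 or initial_mb > maximum_mb:
        raise ValueError("initial size must be positive and no larger than the maximum")
    # The maximum must fit in the free space on the chosen drive.
    if maximum_mb > free_mb:
        raise ValueError(f"maximum of {maximum_mb} MB exceeds the {free_mb} MB free on the drive")
    return initial_mb, maximum_mb


# Example: 8 GB initial, 16 GB maximum, on a drive with 100 GB free.
print(paging_file_sizes(8, 16, free_mb=100 * MB_PER_GB))  # (8192, 16384)
```

To use a real free-space figure instead of the example value, Python's standard library can report it, for instance `shutil.disk_usage("C:\\").free // (1024 * 1024)` for the C: drive.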

**How Much Virtual Memory Should I Set?**

The ideal amount of virtual memory depends on your PC's specifications and usage. A general rule of thumb is to set it between 1.5 and 3 times your installed RAM. For example, if you have 8GB of RAM, a setting between 12GB and 24GB (12,288MB to 24,576MB) fits this rule.
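The rule of thumb above can be expressed as a small calculation that outputs the range in the MB units the Virtual Memory dialog expects. This sketch assumes 1GB = 1024MB; the 1.5 and 3 multipliers come straight from the rule of thumb, and the function name is purely illustrative.

```python
# Minimal sketch of the 1.5x-3x rule of thumb, in the MB units used by the
# Virtual Memory dialog. Assumption: 1 GB = 1024 MB.
# suggested_paging_range_mb is a hypothetical helper name.

MB_PER_GB = 1024


def suggested_paging_range_mb(ram_gb: float, low: float = 1.5, high: float = 3.0) -> tuple[int, int]:
    """Return (lower_mb, upper_mb) for a PC with ram_gb of installed RAM."""
    ram_mb = ram_gb * MB_PER_GB
    return round(ram_mb * low), round(ram_mb * high)


for ram in (4, 8, 16):
    lower, upper = suggested_paging_range_mb(ram)
    print(f"{ram} GB RAM -> {lower}-{upper} MB")
# 4 GB RAM -> 6144-12288 MB
# 8 GB RAM -> 12288-24576 MB
# 16 GB RAM -> 24576-49152 MB
```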

**Is Setting Too Much Virtual Memory Bad?**

Setting too much virtual memory is unlikely to cause harm, but it can quickly eat into your SSD's spare space, limiting your ability to install games or store large files. Some SSDs also slow down when they're nearly full. Setting too little, on the other hand, can lead to slowdowns and crashes when apps run out of memory.
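To gauge whether a given maximum size would leave your SSD uncomfortably full, you can estimate the worst case, where the paging file grows all the way to its maximum. In this sketch the 10% "keep free" threshold is a hypothetical example, not a Microsoft or SSD-vendor figure, and the drive sizes are example values.

```python
# Minimal sketch: estimate the free space left if the paging file grows to its
# maximum size, and flag when the drive would end up nearly full.
# Assumption: the 10% keep-free threshold is a hypothetical example value.

def space_after_paging_file(total_mb: int, free_mb: int, maximum_mb: int,
                            keep_free_fraction: float = 0.10) -> tuple[int, bool]:
    """Return (free_mb_left, too_full) after reserving maximum_mb for the paging file."""
    left = free_mb - maximum_mb
    too_full = left < total_mb * keep_free_fraction
    return left, too_full


# Example: a 256 GB (262144 MB) SSD with 40 GB (40960 MB) free and a 24 GB (24576 MB) maximum.
print(space_after_paging_file(262144, 40960, 24576))  # (16384, True)
```

In this example the drive would drop to 16GB free, below the illustrative 10% mark, so a smaller maximum or a roomier drive might be the better choice.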

**The Pros and Cons of Using Virtual Memory**

Virtual memory is a legitimate way to boost your PC's performance without spending money. However, it's essential to consider the downsides:

- Slower than RAM: even a fast SSD is far slower than physical memory, so upgrading your RAM remains the better option when you can afford it.

- Frequent swapping: if Windows 11 has to keep moving data back and forth between RAM and the paging file, performance can suffer noticeably.

- Reduced SSD capacity: the paging file takes up storage space, leaving less room for games and other files.

**Conclusion**

Virtual memory in Windows 11 can give you a legitimate performance boost without breaking the bank. It's an ideal solution for those struggling with limited RAM or looking to delay an upgrade until RAM prices come down. Just remember to weigh the pros and cons, and consider upgrading your physical memory when it's feasible.