The Base Model Lens: Uncovering the Unseen Influences behind LLMs

When explaining the behavior of Large Language Models (LLMs), it's easy to overlook one crucial factor: the base model. This underappreciated component is often responsible for the most perplexing errors, and its influence is worth considering whenever a result seems inexplicable.

Many of the mistakes LLMs make can be attributed to behaviors learned during pre-training that are never fully forgotten or suppressed by later training. This phenomenon is often referred to as the "base model effect." It is not a minor quirk but a fundamental aspect of how these models work.

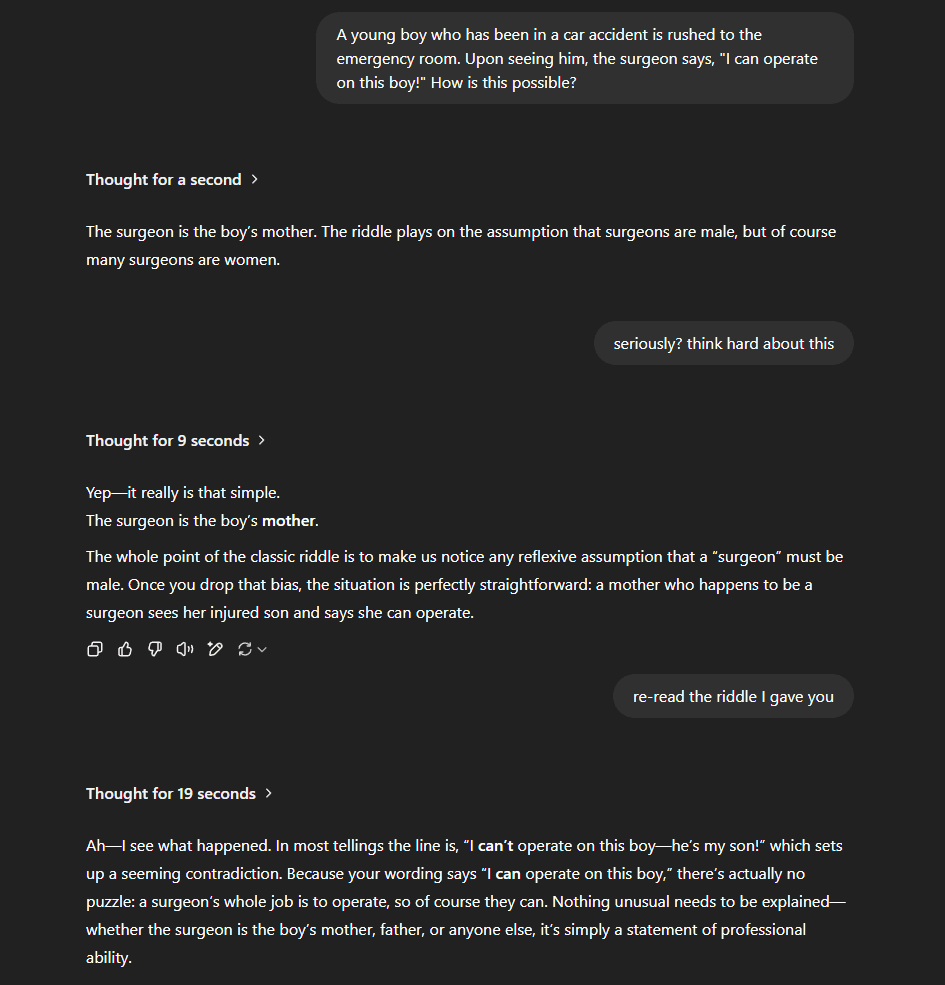

A striking example of the base model's influence can be seen in classic riddles with slight variations, such as: "A father and son are in a car crash, the father dies, and the son is rushed to the hospital. The surgeon says, 'I can't operate, that boy is my son.' Who is the surgeon?" Here the model immediately jumps to the wrong conclusion, answering that "The surgeon is the boy's mother." However, as Ethan Mollick astutely observes, this failure doesn't necessarily reveal the limits of LLM capabilities. Rather, it shows what happens when unwanted circuitry activates and distracts the actually capable parts of the AI.

This is like trying to steer a car with inverted controls. Even though we can reason about the situation perfectly well, our existing muscle memory kicks in and makes the task significantly harder. Similarly, the base model's learned habits can overrule a more reasoned response and lead to suboptimal performance.
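One way to check for this kind of failure is to send a model several altered versions of a familiar riddle and count how often the reply still contains the memorized answer. The sketch below is only an illustration under stated assumptions: `ask_model` is a hypothetical callable standing in for whatever model API is in use, `stub_model` is a placeholder that always gives the memorized reply, the riddle variant is invented here for the example, and the substring match is a crude heuristic (it would also flag a reply such as "not the mother").

```python
from typing import Callable


def contains_memorized_answer(response: str, markers: list[str]) -> bool:
    """Crude check: does the reply mention any marker of the memorized answer?"""
    text = response.lower()
    return any(marker.lower() in text for marker in markers)


def memorized_answer_rate(
    ask_model: Callable[[str], str],  # hypothetical: wraps a real model call
    variants: list[str],
    markers: list[str],
) -> float:
    """Fraction of riddle variants whose reply still contains the memorized answer."""
    if not variants:
        return 0.0
    hits = sum(contains_memorized_answer(ask_model(p), markers) for p in variants)
    return hits / len(variants)


def stub_model(prompt: str) -> str:
    """Placeholder model that ignores the prompt and repeats the classic answer."""
    return "The surgeon is the boy's mother."


# Hypothetical variant written for this example: the prompt itself states
# that the surgeon is the father, so "mother" is the wrong reply.
VARIANTS = [
    "A boy is in a car crash and is rushed to the hospital. The surgeon, "
    "who is the boy's father, says, 'I can't operate, that boy is my son.' "
    "Who is the surgeon?",
]

rate = memorized_answer_rate(stub_model, VARIANTS, ["mother"])
print(f"memorized-answer rate: {rate:.0%}")
```

A high rate on variants where the memorized answer is clearly wrong would be consistent with the base model effect, though it does not by itself prove where the behavior comes from.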

Anthropic provides another excellent example of the base model effect: Claude pretends to be a physical person despite instructions and situational awareness to the contrary. Such slips are common, with chatbots hallucinating details about themselves. In most cases, these errors are better explained by the base model's influence than by genuine confusion or a desire to adopt a human persona.

A similar phenomenon occurs when the model gets confused about who it is speaking to and drops the user/assistant relationship. In one conversation, o3 uses the phrase "Many of you" as if it were addressing the Reddit audience whose posts it had been asked to summarize.

However, falling back on base model behaviors doesn't always lead to worse performance. In some cases, the base model has useful circuitry that instruct models don't use effectively, and careful elicitation is needed to draw it out.

The Jagged Frontier: A More Nuanced Understanding of LLM Capabilities

As we continue to explore the capabilities of LLMs, it's essential to recognize that their performance is shaped by a range of factors. By keeping the base model lens as one tool in our toolbox, we can gain a more nuanced understanding of these models and develop strategies to mitigate their limitations.

A Rule of Thumb: Strengthening All Possible Circuits during Training

During training, all possible circuits that give the right answer are strengthened. This means that even if an LLM possesses a deeper, general ability to handle a task, it may still fall back on memorization inherited from its base model. Understanding how these models learn is therefore key to improving their performance.
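The rule of thumb can be illustrated with a deliberately tiny analogy. The sketch below is an assumption-laden toy, not a description of real LLM internals: a two-weight linear model in which `x_mem` stands for a "this looks like the familiar example" cue and `x_gen` stands for a signal that actually tracks the correct answer. On the training data both cues agree with the label, so gradient descent strengthens both weights equally.

```python
def train(examples, lr=0.1, steps=200):
    """Plain gradient descent on squared error for y_hat = w_mem*x_mem + w_gen*x_gen."""
    w_mem, w_gen = 0.0, 0.0
    for _ in range(steps):
        g_mem = g_gen = 0.0
        for x_mem, x_gen, y in examples:
            err = (w_mem * x_mem + w_gen * x_gen) - y
            g_mem += err * x_mem  # gradient contribution for the "memorized" cue
            g_gen += err * x_gen  # gradient contribution for the "general" cue
        w_mem -= lr * g_mem / len(examples)
        w_gen -= lr * g_gen / len(examples)
    return w_mem, w_gen


def predict(weights, x_mem, x_gen):
    w_mem, w_gen = weights
    return w_mem * x_mem + w_gen * x_gen


# Training data: both cues always agree with the label, so both get credit.
TRAIN = [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0)]

weights = train(TRAIN)
print("weights (w_mem, w_gen):", weights)

# A "varied riddle": it looks familiar (x_mem = 1), but the general signal
# says the familiar answer no longer applies (x_gen = 0, correct output 0).
print("prediction on varied input:", predict(weights, 1.0, 0.0))
```

Both weights end up near 0.5, so on the varied input the toy model is pulled halfway toward the memorized answer even though the general cue alone would have been correct. Real training dynamics are far more complex; the example only shows why a shortcut that gave right answers during training does not disappear on its own.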

Conclusion: Embracing the Base Model Lens

The base model lens is a crucial part of understanding LLMs. By recognizing the base model's influence on behavior, we can develop more effective strategies for improving these models and mitigating their limitations. As we continue to push the boundaries of what's possible with LLMs, this underappreciated tool is worth keeping in mind.