US Government Officials Targeted by Sophisticated Scams

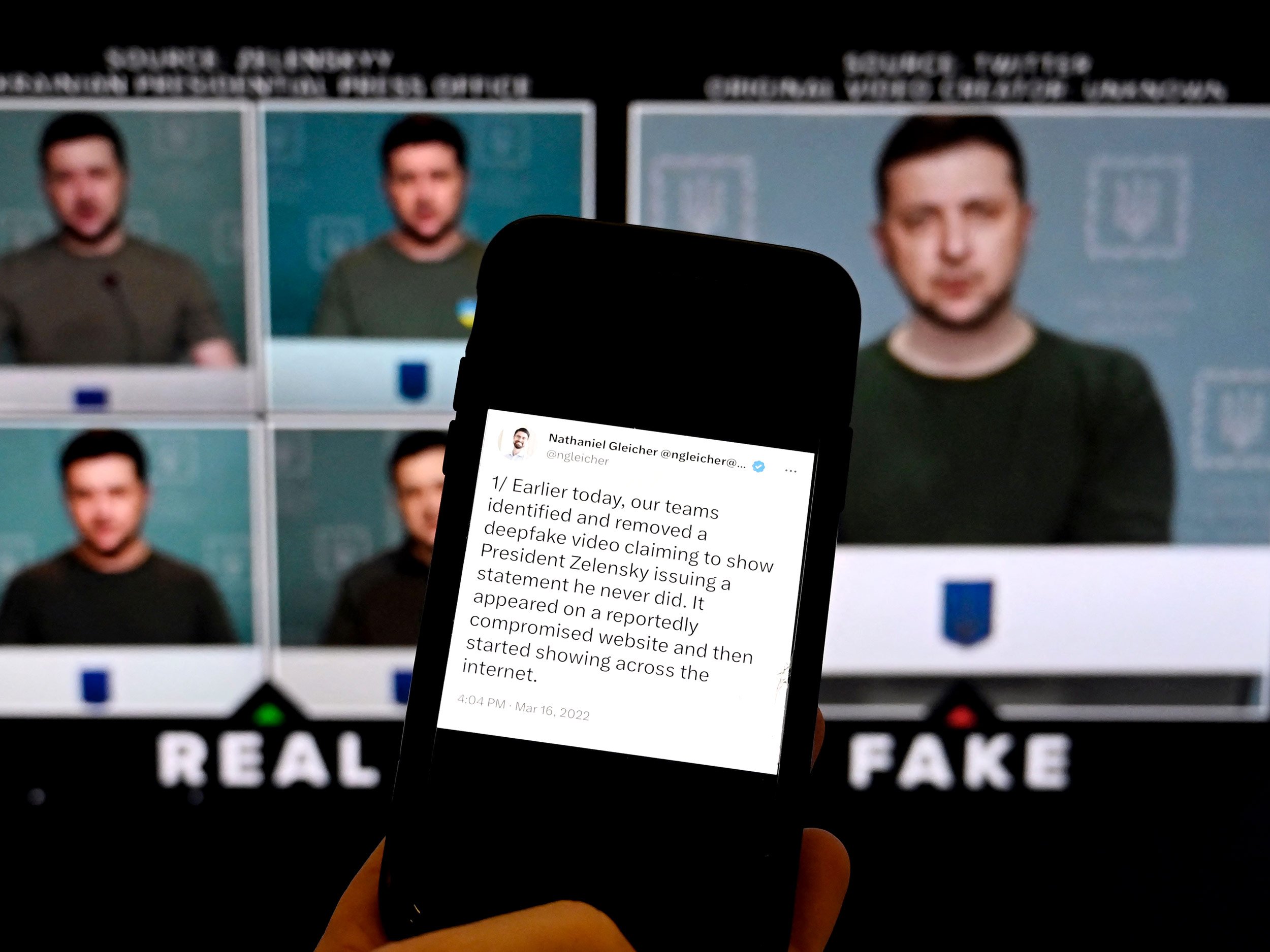

Threat actors are running a sophisticated campaign that uses AI-generated (deepfake) voice messages and text messages to impersonate senior US officials. The FBI has issued an alert warning that the attackers rely on smishing (malicious text messages) and vishing (malicious voice messages) to trick current or former senior US federal or state government officials and their contacts.

The scammers, who have been active since April 2025, send malicious links disguised as invitations to a messaging platform in order to gain access to officials' accounts. Once inside, they abuse the accounts' contacts to impersonate trusted individuals and extract data or funds.

“One way the actors gain such access is by sending targeted individuals a malicious link under the guise of transitioning to a separate messaging platform,” reads the alert. “Access to personal or official accounts operated by US officials could be used to target other government officials, or their associates and contacts, by using trusted contact information they obtain.”
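The alert describes behavior, not a technical control, but the general advice to avoid links from unverified sources can be partly automated. Below is a minimal Python sketch that assumes an organization keeps its own allowlist of vetted messaging domains. `TRUSTED_DOMAINS`, `is_trusted_link`, and the placeholder domains are hypothetical and do not come from the FBI guidance. The function accepts a link only if it uses HTTPS and its host is an allowlisted domain or a subdomain of one. This rejects common tricks such as `messenger.example.evil.test` or `messenger.example@evil.test`.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist of domains an organization has vetted for official
# messaging. These are placeholders, not platforms named by the FBI.
TRUSTED_DOMAINS = frozenset({"messenger.example", "agency.example"})


def is_trusted_link(url: str, trusted: frozenset[str] = TRUSTED_DOMAINS) -> bool:
    """Accept a link only if it uses HTTPS and its host is an allowlisted
    domain or a subdomain of one."""
    parts = urlsplit(url.strip())
    if parts.scheme.lower() != "https":
        return False
    # .hostname drops any "user@" prefix and port, and lowercases the host.
    host = (parts.hostname or "").rstrip(".")
    return any(host == domain or host.endswith("." + domain) for domain in trusted)


if __name__ == "__main__":
    for link in (
        "https://messenger.example/join",
        "https://messenger.example.evil.test/join",
        "https://messenger.example@evil.test/join",
    ):
        print(link, "->", is_trusted_link(link))
```

For example, `is_trusted_link("https://messenger.example@evil.test/join")` returns `False`. Everything before the `@` is user information, and the real host is `evil.test`. A check like this only filters obvious lookalikes. An allowlisted domain can still host a malicious page, so confirming the request through a trusted channel remains necessary.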

Threat actors also use social engineering schemes to acquire contact information, which they can then use to impersonate contacts and elicit information or funds. The FBI warns that these scammers are becoming increasingly sophisticated, using AI-generated content to build trust and gain access to sensitive information. It also recommends a set of precautions against AI-powered scams, summarized below.

Protecting Yourself from Deepfake Scams

To avoid falling victim to deepfake scams, the FBI recommends the following precautions:

- Verify callers' identities using contact information you already have

- Check for slight errors in names, messages, and visuals

- Look for flaws in AI-generated content, such as unnatural speech or visuals

- Be wary of realistic fakes built from public photos or cloned voices

- Always confirm authenticity before responding

- Contact security officials or the FBI if in doubt

- Never share sensitive information with unknown contacts

- Verify identity through trusted channels, especially on new platforms

- Don't send money or cryptocurrency without first verifying the request

- Avoid clicking links or downloading files from unverified sources

- Enable two-factor authentication and never share one-time passcodes (OTPs)

- Agree on a secret word or phrase with family members to confirm identities
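The advice to check for slight errors in names can be backed by a simple automated check. The Python sketch below flags a sender address or handle that is almost, but not exactly, identical to a known contact, such as `jane.d0e@agency.example` imitating `jane.doe@agency.example`. It uses `difflib.SequenceMatcher` from the standard library. The contact list, the `lookalike_of` helper, and the 0.9 similarity threshold are illustrative assumptions, not values from the FBI alert.

```python
from collections.abc import Sequence
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical contacts the user already knows and trusts.
KNOWN_CONTACTS = ("jane.doe@agency.example", "john.smith@agency.example")


def lookalike_of(
    sender: str,
    known: Sequence[str] = KNOWN_CONTACTS,
    threshold: float = 0.9,  # assumed cut-off; tune on real data
) -> Optional[str]:
    """Return the known contact that `sender` closely resembles without
    matching exactly, or None if there is no such near-miss."""
    sender_norm = sender.strip().lower()
    normalized = [contact.lower() for contact in known]

    # An exact (case-insensitive) match is a known contact, not a lookalike.
    if sender_norm in normalized:
        return None

    best_match, best_ratio = None, 0.0
    for original, norm in zip(known, normalized):
        # ratio() is 2*M/T: M matching characters, T total characters.
        ratio = SequenceMatcher(None, sender_norm, norm).ratio()
        if ratio >= threshold and ratio > best_ratio:
            best_match, best_ratio = original, ratio
    return best_match


if __name__ == "__main__":
    print(lookalike_of("jane.d0e@agency.example"))  # jane.doe@agency.example
    print(lookalike_of("someone@else.test"))        # None
```

A near-miss flag is a prompt to verify through a known channel, not proof of fraud. A similarity check also will not catch a brand-new account whose name is unrelated to any known contact.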

Stay Safe Online

Follow me on Twitter: @securityaffairs, Facebook, and Mastodon for the latest news and tips on staying safe online.