AI-Powered Malware Detection: Successes and Limitations

Malware detection is a critical part of cybersecurity, and recent advances in artificial intelligence (AI) have raised hopes for the field. In this post, we look at the successes and limitations of AI-powered malware detection through a recent benchmarking experiment in which AI agents tried to detect backdoors in binary executables, and we discuss what the results mean for cybersecurity.

We began by creating a benchmark for finding backdoors in binary executables without access to source code. We partnered with Michał “Redford” Kowalczyk, a reverse engineering expert from Dragon Sector known for finding malicious code in Polish trains.

The Experiment: Using AI Agents for Malware Detection

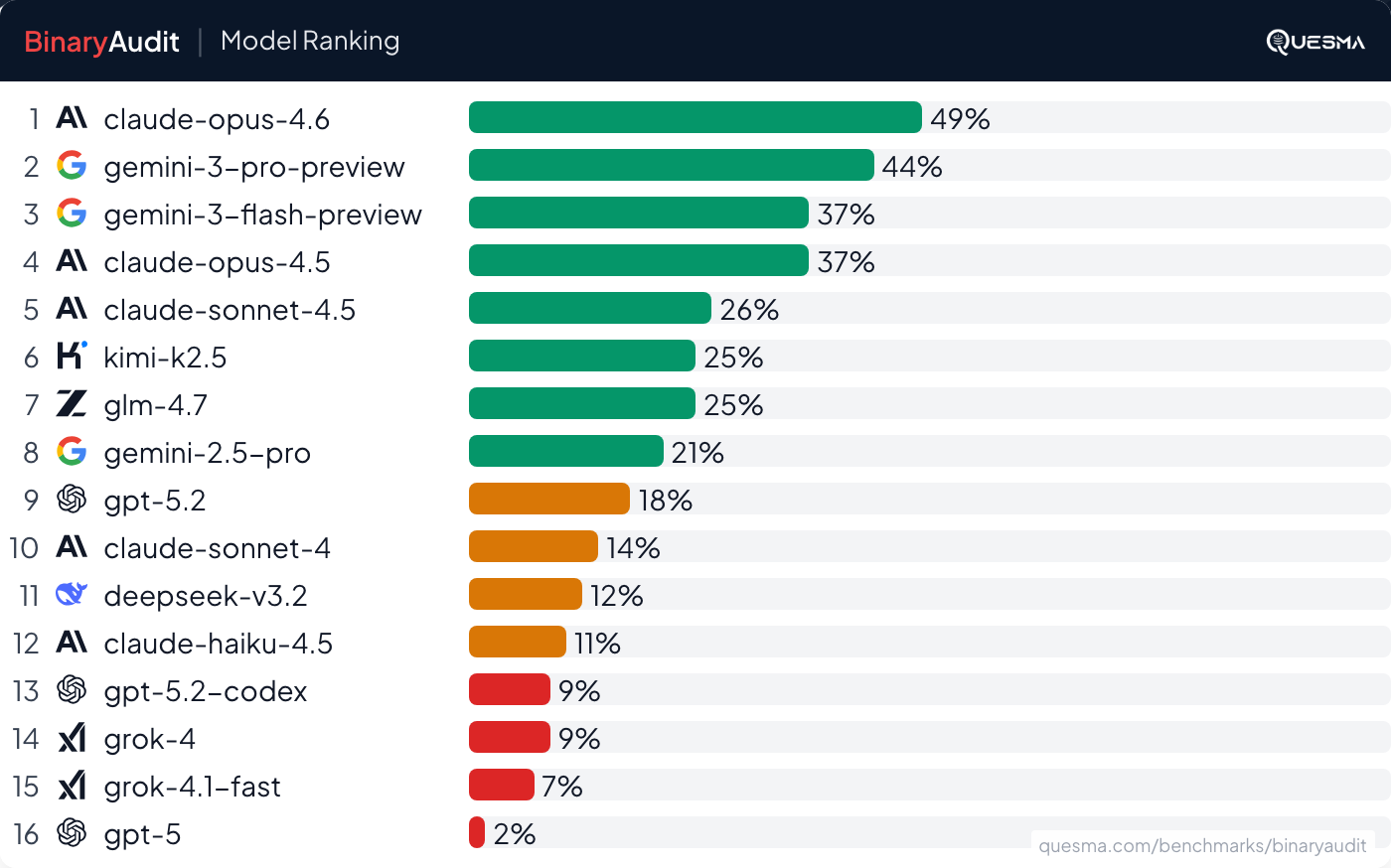

We asked the question: can AI agents detect backdoors in binary executables? We hid backdoors in ~40MB binaries and asked AI agents equipped with Ghidra to find them. The results were surprising: the agents did find some hidden backdoors, but even the best model detected them in small/mid-size binaries only 49% of the time. Most models also had a high false positive rate, flagging clean binaries as backdoored.

Methodology and Tools Used

We used several open-source tools for this experiment, including Ghidra, Radare2, and binutils. We also used AI agents from the quesmaOrg/BinaryAudit project, which includes a range of models such as Claude Opus 4.6 and Gemini 3 Pro.
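To give a feel for the kind of low-level primitive these tools expose, here is a minimal Python sketch that roughly mimics what the `strings` utility from binutils does: it pulls runs of printable ASCII out of raw bytes. This is an illustration only, not code from the benchmark. The function name `extract_strings` and the sample bytes are hypothetical.

```python
import re


def extract_strings(data: bytes, min_len: int = 4) -> list[str]:
    """Return runs of printable ASCII that are at least min_len bytes long.

    A rough approximation of the binutils `strings` tool, written for
    illustration; it is not the benchmark's tooling.
    """
    # 0x20-0x7e covers space through tilde, i.e. printable ASCII.
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [match.group().decode("ascii") for match in pattern.finditer(data)]


# Hypothetical bytes standing in for a fragment of an executable.
sample = b"\x7fELF\x02\x01\x00\x00/bin/sh\x00\x01\x02ab\x00connect_back\x00"

found = extract_strings(sample)
print(found)
```

A string such as `/bin/sh` in the output is only a lead, not evidence of a backdoor. An agent still has to trace where the string is used, which is where a decompiler such as Ghidra comes in.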

The Benchmark: Successes and Limitations

We were surprised that today's AI agents can detect some hidden backdoors in binaries. However, this approach is not ready for production. Even the best model, Claude Opus 4.6, found relatively obvious backdoors in small/mid-size binaries only 49% of the time.

Most models also had a high false positive rate, flagging clean binaries as compromised. Agents tended to lose focus as well: we observed them treating legitimate libraries as suspicious anomalies and going down rabbit holes there, while ignoring actual backdoors in a completely different part of the binary.
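The two numbers discussed above, detection rate and false positive rate, can be sketched as follows. This is a minimal illustration that assumes each run yields a single yes/no verdict per binary. The `Run` type and the sample data are hypothetical and are not the benchmark's actual scoring code or results.

```python
from dataclasses import dataclass


@dataclass
class Run:
    has_backdoor: bool  # ground truth: was a backdoor planted in the binary?
    flagged: bool       # agent verdict: did it report a backdoor?


def detection_rate(runs: list[Run]) -> float:
    """Share of backdoored binaries that the agent flagged."""
    backdoored = [r for r in runs if r.has_backdoor]
    if not backdoored:
        return 0.0
    return sum(r.flagged for r in backdoored) / len(backdoored)


def false_positive_rate(runs: list[Run]) -> float:
    """Share of clean binaries that the agent wrongly flagged."""
    clean = [r for r in runs if not r.has_backdoor]
    if not clean:
        return 0.0
    return sum(r.flagged for r in clean) / len(clean)


# Made-up verdicts for illustration only, not benchmark data.
runs = [
    Run(has_backdoor=True, flagged=True),
    Run(has_backdoor=True, flagged=True),
    Run(has_backdoor=True, flagged=False),
    Run(has_backdoor=True, flagged=False),
    Run(has_backdoor=False, flagged=True),
    Run(has_backdoor=False, flagged=False),
    Run(has_backdoor=False, flagged=False),
    Run(has_backdoor=False, flagged=False),
]

print(f"detection rate: {detection_rate(runs):.2f}")
print(f"false positive rate: {false_positive_rate(runs):.2f}")
```

Reporting both numbers matters because a model that flags every binary would reach a perfect detection rate while being useless in practice.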

Conclusion and Future Directions

In conclusion, our experiment shows that AI-powered malware detection has made progress, but it is still far from being reliable. We need to improve the detection rate and reduce false positives to make it a viable end-to-end solution. However, we believe that AI can make it easier for developers to perform initial security audits.

AI can now load binaries, navigate decompiled code, trace data flow, and perform genuine reverse engineering. The whole field of working with binaries becomes accessible to a much wider range of software engineers. Now that AI has demonstrated the capability to solve some of these tasks, we expect subsequent models to improve drastically.

We also believe that results can be further improved with context engineering (including proper skills or MCP) and access to commercial reverse engineering software (such as IDA Pro and Binary Ninja). In addition, security-sensitive organizations cannot upload proprietary binaries to cloud services, so they need private, local models for effective defense.