**ZeroDayRAT Spyware Grants Attackers Total Access to Mobile Devices**

A newly discovered commercial mobile spyware toolkit, ZeroDayRAT, grants attackers full remote access to Android and iOS devices for spying and data theft. The tool is sold on the Telegram messaging platform, and its capabilities rival those of nation-state-built tools.

ZeroDayRAT supports live camera access, keylogging, and the theft of banking and crypto data. The spyware gives attackers full control over mobile devices, enabling them to watch users in real time, steal money, and even hijack clipboard data to replace copied wallet addresses with their own.

According to a report published by iVerify, ZeroDayRAT was first spotted in February 2026. The tool is designed for use on a wide range of phones, requiring no technical expertise from the attacker. Once installed, ZeroDayRAT provides operators with a detailed overview of the device, including the phone model, operating system, battery level, country, SIM and carrier details, app usage, recent activity, and SMS previews.

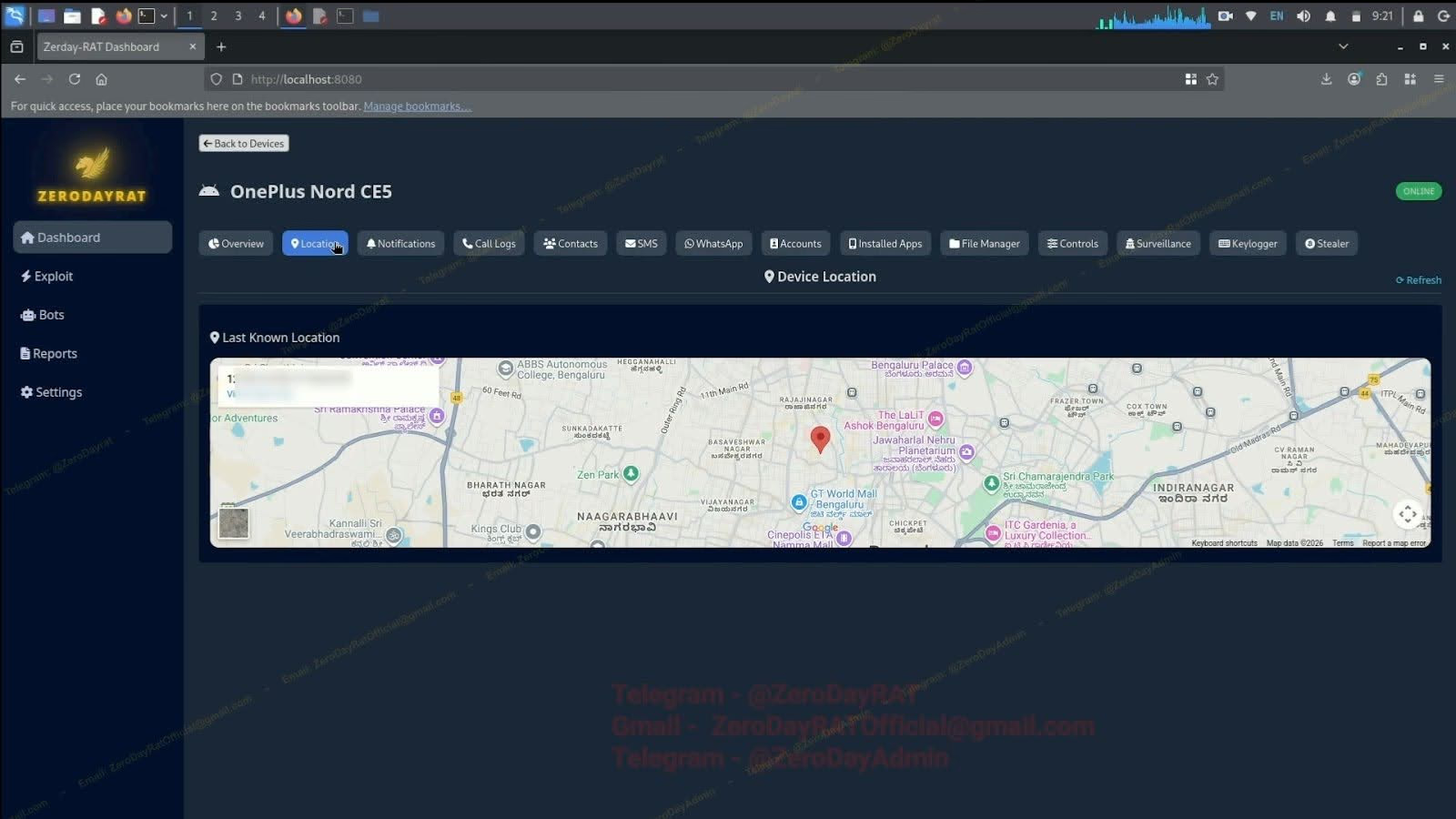

This information allows attackers to profile victims' habits, contacts, and daily activities. From the control panel, operators can track real-time and historical GPS locations on a map, monitor notifications from all apps, and see alerts, messages, and missed calls without opening any app.

A dedicated accounts section lists every service linked to the device, including Google, WhatsApp, Instagram, Facebook, Telegram, Amazon, Flipkart, PhonePe, Paytm, Spotify, and more. This information enables attackers to attempt account takeover or launch targeted social engineering attacks.

ZeroDayRAT also enables live surveillance, not just data collection. From one panel, operators can stream the phone's camera, record the screen, and listen through the microphone in real time while tracking the device's GPS location, allowing them to watch, listen to, and locate targets simultaneously.

A built-in keylogger records every action with precise timestamps, including keystrokes, gestures, app launches, and unlocks. A live screen preview lets attackers watch what the victim is doing as it happens. ZeroDayRAT also supports direct financial theft, with a crypto stealer component that scans devices for wallet apps, records wallet IDs and balances, and hijacks clipboard data to replace copied wallet addresses with the attacker's own.
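On the defensive side, the clipboard-swapping behavior described above can be countered by comparing the pasted address against the intended one in full, character by character, rather than glancing at the first and last few characters. The following is a minimal sketch of that check, not part of the iVerify report or of any wallet's actual implementation; the function names and the sample address strings are hypothetical placeholders.

```python
from typing import Optional


def normalize(address: str) -> str:
    # Strip surrounding whitespace that copy/paste commonly introduces.
    # Case is left untouched because some address formats are case-sensitive.
    return address.strip()


def pasted_address_is_safe(intended: str, pasted: str) -> bool:
    # Accept the pasted value only if it is non-empty and matches the
    # intended address exactly after whitespace normalization.
    a, b = normalize(intended), normalize(pasted)
    return bool(a) and a == b


def first_difference(intended: str, pasted: str) -> Optional[int]:
    # Return the index of the first differing character, or None if the
    # two addresses are identical after normalization.
    a, b = normalize(intended), normalize(pasted)
    for i, (x, y) in enumerate(zip(a, b)):
        if x != y:
            return i
    if len(a) != len(b):
        return min(len(a), len(b))
    return None


if __name__ == "__main__":
    # Hypothetical placeholder strings, not real wallet addresses.
    intended = "addr1-EXAMPLE-intended-0001"
    pasted = "addr1-EXAMPLE-attacker-0001"  # same prefix and suffix, different middle
    if not pasted_address_is_safe(intended, pasted):
        print("Mismatch at index", first_difference(intended, pasted))
```

A full-string comparison matters here because a substituted address can be chosen to share a prefix or suffix with the original. This check only helps when the intended address is known from a source other than the clipboard, and it does nothing against the toolkit's other capabilities, such as overlays or keylogging.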

A separate banking module targets mobile banking apps, UPI services, and payment platforms like Apple Pay and PayPal, using overlays to steal login details. From one control panel, operators can attack both crypto assets and traditional financial accounts.

"Taken together, this is a complete mobile compromise toolkit," concludes the report. "The kind that used to require nation-state investment or bespoke exploit development, now sold on Telegram." The report warns of the growing threat posed by ZeroDayRAT, which can be purchased by a single buyer and provides full access to a target's location, messages, finances, camera, microphone, and keystrokes from a browser tab.
