**Davos Discussion Mulls How to Keep AI Agents from Running Wild**

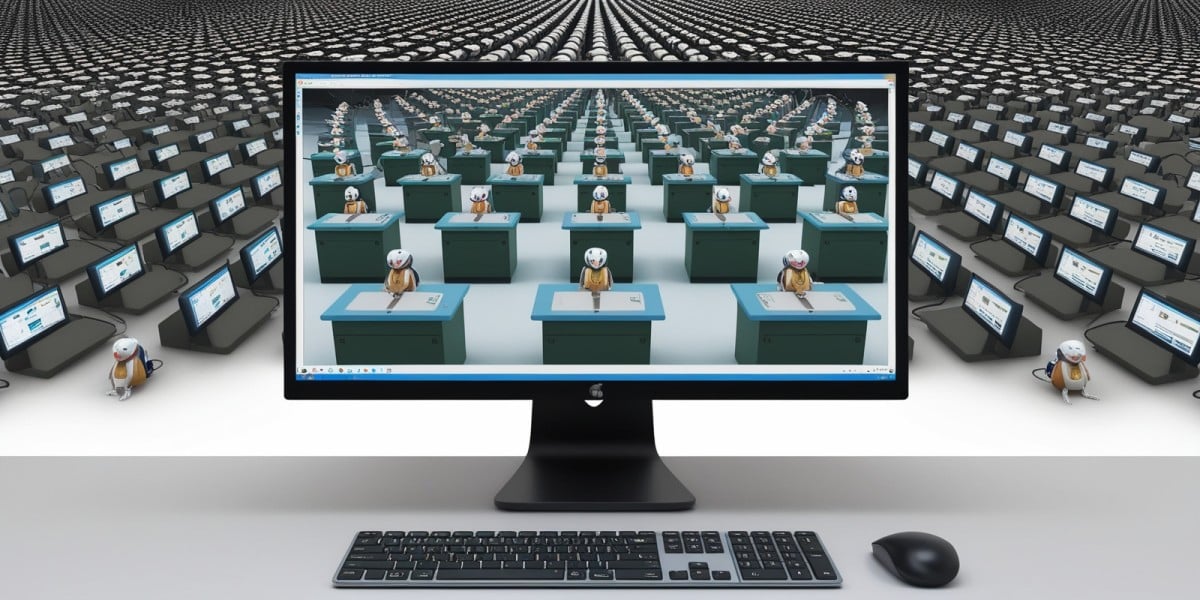

At this year's World Economic Forum in Davos, a panel discussion focused on a growing concern: how to secure AI agents and keep them from becoming the ultimate insider threat. The topic sparked lively debate among the experts but produced no clear answers.

"We have enough difficulty getting humans trained to be effective at preventing cyberattacks," said Dave Treat, chief technology officer of Pearson, a global education and training company. "Now I've got to do it for humans and agents in combination."

Pearson is introducing AI agents into its environments, and like other organizations it faces a balancing act: capturing the efficiency gains the agents offer while preventing them from accessing sensitive data or carrying out malicious tasks.

AI agents "tend to want to please," Treat explained. "How are we creating and tuning these agents to be suspicious and not be fooled by the same ploys and tactics that humans are fooled with?"

Securing AI agents is one facet of a broader set of AI security problems that includes prompt injection, in which malicious instructions hidden in content an AI system processes can hijack its behavior. Zero-trust and least-privilege access remain high on the list of best practices, but security experts are still searching for effective solutions.

According to Cloudflare co-founder and president Michelle Zatlyn, organizations should treat AI agents much as they treat employees, as an extension of the team. "Organizations are adopting zero trust for their employees," she said. "The same thing will happen with agents."

Hatem Dowidar, group CEO of e&, argued that the oversight organizations apply to their human staff must be extended to agents. "We need to create that also for AI agents," he said. "We need to set up guardrails and have guard agents that are in a separate system that look into how your AI agents are behaving and immediately flagging anything that is going out of the ordinary."

Mastercard CEO Michael Miebach stressed the importance of combining signals from multiple data streams to determine whether activity is safe or malicious. "It comes down to many things," he said. "It could be identity. It could also be your location data. It's many, many data sets that come together with a 99 percent probability score."

As companies grapple with securing AI agents and keeping them from becoming insider threats, security vendors are moving to capitalize on the trend by acquiring smaller AI-focused startups, a sign of the commercial opportunity emerging where AI and cybersecurity intersect.

Dowidar argued that the networks themselves must also get smarter. "We need more intelligent networks," he said. "We need to continuously monitor for different behaviors. People are using AI capabilities or agents for hacking or for bad actions; we also have agents that are looking at new behavior or different behavior and isolating it early on to be able to protect the network."

As the World Economic Forum in Davos draws to a close, the panelists agreed on at least one point: securing AI agents and keeping them from becoming insider threats will require continued innovation, collaboration, and investment in cybersecurity research.